Introduction

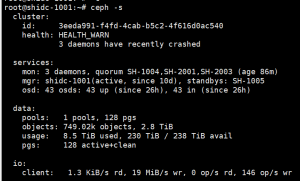

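I have been tinkering with a Ceph cluster recently. The test environment consists of 4 physical Ceph nodes, each with 8 to 12 SATA/SAS mechanical hard drives, for 43 OSDs in total. Performance is far below what I expected, and I am still adjusting the configuration...

Since I have just benchmarked it, I am sharing the results here for reference. For performance tests of the Ceph pool itself, see the previous post.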


Cluster configuration

- 4 Ceph nodes: SH-1001 SH-1003 SH-1004 SH-1005
- 2 Managers: SH-1001 SH-1005
- 3 Monitors: SH-1004 SH-2001 SH-2003

Running the tests

Internal network throughput (iperf3)

```
root@SH-1005:~# iperf3 -s
-----------------------------------------------------------
Server listening on 5201
-----------------------------------------------------------
Accepted connection from 10.1.0.1, port 42784
[  5] local 10.1.0.5 port 5201 connected to 10.1.0.1 port 42786
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-1.00   sec   649 MBytes  5.44 Gbits/sec
[  5]   1.00-2.00   sec   883 MBytes  7.41 Gbits/sec
[  5]   2.00-3.00   sec   689 MBytes  5.78 Gbits/sec
[  5]   3.00-4.00   sec   876 MBytes  7.35 Gbits/sec
[  5]   4.00-5.00   sec   641 MBytes  5.38 Gbits/sec
[  5]   5.00-6.00   sec   637 MBytes  5.34 Gbits/sec
[  5]   6.00-7.00   sec   883 MBytes  7.41 Gbits/sec
[  5]   7.00-8.00   sec   643 MBytes  5.40 Gbits/sec
[  5]   8.00-9.00   sec   889 MBytes  7.46 Gbits/sec
[  5]   9.00-10.00  sec   888 MBytes  7.45 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-10.00  sec  7.50 GBytes  6.44 Gbits/sec                  receiver
-----------------------------------------------------------
```
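The per-second bitrate above alternates between roughly 5.4 and 7.4 Gbit/s. The minimal sketch below turns such a log into one reference number. The helper name `iperf_summary` is hypothetical and not part of iperf3. It assumes each interval line contains a `<value> Gbits/sec` token, and it skips the summary lines tagged `sender`/`receiver`. For the run above it should report min 5.34, mean 6.44 and max 7.46 Gbit/s, which is about 805 MB/s. That is a rough single-stream figure to keep in mind for the throughput results below.

```shell
# Summarise per-interval iperf3 bitrates read from stdin.
# Assumptions: every interval line contains "<value> Gbits/sec"; the final
# summary lines (tagged "sender"/"receiver") are skipped; 1 MB = 10^6 bytes.
iperf_summary() {
  awk '
    /Gbits\/sec/ && !/sender|receiver/ {
      for (i = 2; i <= NF; i++) if ($i == "Gbits/sec") v = $(i - 1) + 0
      n++; sum += v
      if (n == 1 || v < min) min = v
      if (n == 1 || v > max) max = v
    }
    END {
      if (n == 0) { print "no samples"; exit 1 }
      mean = sum / n
      printf "samples=%d min=%.2f mean=%.2f max=%.2f Gbit/s (~%.0f MB/s)\n", n, min, mean, max, mean * 1000 / 8
    }'
}

# Usage, run on the client side against the iperf3 server on SH-1005:
#   iperf3 -c 10.1.0.5 -t 10 | iperf_summary
```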

Create a benchmark pool

```
root@shidc-1001:~# ceph osd pool create rbdbench 128 128
pool 'scbench' created
```
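In `ceph osd pool create rbdbench 128 128`, the two numbers are `pg_num` and `pgp_num`. Older Ceph placement-group documentation gives a rule of thumb for the total PG count: (OSDs × 100) / replica count, rounded up to a power of two. The sketch below applies that rule. Several of its inputs are assumptions:

- The replica count of 3 is the default for replicated pools, but the post does not state it.
- The helper name is hypothetical.
- The result is a budget for the whole cluster, shared by all pools, not a target for this single benchmark pool.

Newer releases can also manage `pg_num` through the PG autoscaler.

```shell
# Rule of thumb (older Ceph PG docs): total PGs ~= OSDs * per_osd / replicas,
# rounded up to the next power of two.
# Defaults: 3 replicas (assumed, not stated in the post), 100 PGs per OSD.
pg_rule_of_thumb() {
  osds=$1
  replicas=${2:-3}
  per_osd=${3:-100}
  raw=$(( (osds * per_osd + replicas - 1) / replicas ))  # ceiling division
  pgs=1
  while [ "$pgs" -lt "$raw" ]; do
    pgs=$(( pgs * 2 ))
  done
  echo "$pgs"
}

pg_rule_of_thumb 43   # 43 OSDs, 3 replicas -> 2048 PGs for the whole cluster
```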

Create, format, and mount a test image

Create the image and map it:

```
root@shidc-1001:~# rbd create image01 --size 1024 --pool rbdbench
root@shidc-1001:~# sudo rbd map image01 --pool rbdbench --name client.admin
/dev/rbd0
```

Format it:

```
root@shidc-1001:~# sudo /sbin/mkfs.ext4 -m0 /dev/rbd/rbdbench/image01
mke2fs 1.44.5 (15-Dec-2018)
Discarding device blocks: done
Creating filesystem with 262144 4k blocks and 65536 inodes
Filesystem UUID: 46db8a31-781a-44e6-82c5-4a47ce801cb8
Superblock backups stored on blocks:
	32768, 98304, 163840, 229376

Allocating group tables: done
Writing inode tables: done
Creating journal (8192 blocks): done
Writing superblocks and filesystem accounting information: done
```
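`rbd create --size` takes megabytes by default, so `--size 1024` produces a 1 GiB image. The shell arithmetic check below (the helper name is hypothetical) shows this matches the `262144 4k blocks` that mkfs.ext4 reports. It is also why the 4 KiB `rbd bench` runs below report `bytes 1073741824` and 262144 total ops: one pass covers the image exactly once. It further shows that a single 1 GiB I/O, as used in the large-file tests, spans the entire image.

```shell
# How many fixed-size units fit in an RBD image whose size is given in MiB
# (rbd create --size defaults to megabytes).
units_in_image() {
  size_mib=$1
  unit_bytes=$2
  echo $(( size_mib * 1024 * 1024 / unit_bytes ))
}

units_in_image 1024 4096         # 4 KiB units: 262144 (ext4 blocks / rbd bench ops)
units_in_image 1024 1073741824   # 1 GiB units: 1, so one large-file I/O spans the whole image
```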

Mount it:

```
root@shidc-1001:~# sudo mkdir /mnt/ceph-block-device
root@shidc-1001:~# sudo mount /dev/rbd/rbdbench/image01 /mnt/ceph-block-device
```

Write performance (4 KiB sequential, 16 threads)

```
root@shidc-1001:~# rbd bench-write image01 --pool=rbdbench
rbd: bench-write is deprecated, use rbd bench --io-type write ...
bench type write io_size 4096 io_threads 16 bytes 1073741824 pattern sequential
SEC OPS OPS/SEC BYTES/SEC
1 11664 11587.07 47460628.53
2 22800 11362.32 46540063.91
3 29472 9789.97 40099737.27
4 40784 10210.00 41820176.51
5 50704 10151.92 41582250.87
.........
26 236304 9258.06 37921014.79
27 241008 7920.16 32440974.16
28 252736 9267.71 37960557.42
29 259200 7964.64 32623166.14
elapsed: 29 ops: 262144 ops/sec: 8880.04 bytes/sec: 36372635.92
```
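The raw `bytes/sec` figure is hard to read at a glance. The minimal awk sketch below converts the final summary line into IOPS and MiB/s. The helper name is hypothetical, and it assumes the `elapsed: ... ops/sec: ... bytes/sec: ...` layout shown above. For this 4 KiB sequential write run it gives about 8880 IOPS, or 34.69 MiB/s.

```shell
# Parse the final summary line of `rbd bench` and print IOPS and MiB/s.
# Assumes the "elapsed: N ops: N ops/sec: X bytes/sec: Y" layout shown above.
rbd_bench_summary() {
  awk '/elapsed:/ {
    for (i = 1; i < NF; i++) {
      if ($i == "ops/sec:")   iops = $(i + 1)
      if ($i == "bytes/sec:") bps  = $(i + 1)
    }
    printf "IOPS=%.0f throughput=%.2f MiB/s\n", iops, bps / 1048576
  }'
}

echo "elapsed: 29 ops: 262144 ops/sec: 8880.04 bytes/sec: 36372635.92" | rbd_bench_summary
# IOPS=8880 throughput=34.69 MiB/s
```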

Read performance (4 KiB sequential, 16 threads)

```
root@shidc-1001:~# rbd bench image01 --pool=rbdbench --io-type read
bench type read io_size 4096 io_threads 16 bytes 1073741824 pattern sequential
SEC OPS OPS/SEC BYTES/SEC
1 720 702.27 2876517.32
2 2256 1138.25 4662278.93
3 3904 1299.71 5323597.27
4 4496 1113.50 4560895.47
5 5040 999.98 4095910.57
.........
51 94816 2524.36 10339760.03
52 97312 2599.41 10647200.35
53 98976 2506.69 10267411.14
54 99584 2150.07 8806694.77
2020-12-13 22:21:55.694 7f6be77fe700 -1 received signal: Interrupt, si_code : 128, si_value (int): 0, si_value (ptr): 0, si_errno: 0, si_pid : 0, si_uid : 0, si_addr0, si_status0
elapsed: 54 ops: 99859 ops/sec: 1833.56 bytes/sec: 7510265.85
```
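This read run was stopped by hand, as the `received signal: Interrupt` line shows. The final `ops/sec: 1833.56` therefore averages only the roughly 54 seconds before the interrupt, while the per-second rate was still around 2500 ops/sec when it stopped. The helper below (name hypothetical) computes how much of the planned 1 GiB pass actually finished: 99859 of 262144 ops, about 38.1%.

```shell
# Share of a planned rbd bench pass that completed before it was interrupted.
# Arguments: completed ops, planned total bytes, I/O size in bytes.
completed_fraction() {
  awk -v done_ops="$1" -v total="$2" -v io="$3" 'BEGIN {
    planned = total / io
    printf "%d/%d ops (%.1f%%)\n", done_ops, planned, 100 * done_ops / planned
  }'
}

completed_fraction 99859 1073741824 4096   # 99859/262144 ops (38.1%)
```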

Large-file write performance (1 GiB sequential I/Os, 1 thread)

```
root@shidc-1001:~# rbd bench image01 --pool=rbdbench --io-type write --io-size 1G --io-threads 1 --io-total 30G --io-pattern seq
bench type write io_size 1073741824 io_threads 1 bytes 32212254720 pattern sequential
SEC OPS OPS/SEC BYTES/SEC
4 2 1.36 1458842260.17
7 3 0.86 927208912.24
9 4 0.72 775352351.12
12 5 0.63 679848852.00
.........
61 26 0.43 465857602.60
63 27 0.43 464246208.75
66 28 0.43 463925277.26
68 29 0.43 466829866.23
elapsed: 70 ops: 30 ops/sec: 0.42 bytes/sec: 455115433.42
```
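To compare this with the network test, it helps to express the throughput in several units. The sketch below (helper name hypothetical) converts a `bytes/sec` value to decimal MB/s, MiB/s and Gbit/s. The 1 GiB sequential write settled at about 455.1 MB/s, or roughly 3.64 Gbit/s. That is a little over half of the 6.44 Gbit/s iperf3 measured, which suggests the client link is not the limiting factor for this test.

```shell
# Express a bytes/sec figure (as printed by rbd bench) in common units.
# MB and Gbit are decimal (10^6, 10^9 bytes/bits); MiB is binary (2^20 bytes).
bps_units() {
  awk -v b="$1" 'BEGIN {
    printf "%.1f MB/s, %.1f MiB/s, %.2f Gbit/s\n", b / 1e6, b / 1048576, b * 8 / 1e9
  }'
}

bps_units 455115433.42   # 455.1 MB/s, 434.0 MiB/s, 3.64 Gbit/s
```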

Large-file read performance (1 GiB sequential I/Os, 1 thread)

```
root@shidc-1001:~# rbd bench image01 --pool=rbdbench --io-type read --io-size 1G --io-threads 1 --io-total 30G --io-pattern seq
bench type read io_size 1073741824 io_threads 1 bytes 32212254720 pattern sequential
SEC OPS OPS/SEC BYTES/SEC
2 2 2.64 2835492413.78
3 3 1.70 1826031411.91
4 4 1.42 1521692856.20
5 5 1.27 1360270044.25
.........
30 26 0.82 877785194.47
31 27 0.81 874924110.44
32 28 0.81 868694891.83
34 29 0.81 866451666.64
elapsed: 35 ops: 30 ops/sec: 0.85 bytes/sec: 909920936.74
```
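The large sequential read averaged about 910 MB/s (≈7.28 Gbit/s). That is above the 6.44 Gbit/s single-stream iperf3 result. This is plausible because a RADOS client talks to several OSD hosts over separate connections, so one iperf3 stream is a reference point rather than a hard cap.

The hypothetical helper below expresses a bench result as a percentage of that iperf3 figure. Its default of 6.44 Gbit/s is taken from the test above.

```shell
# Compare an rbd bench bytes/sec result with a reference bitrate in Gbit/s.
# The default of 6.44 Gbit/s is the single-stream iperf3 result from this post.
pct_of_link() {
  awk -v b="$1" -v ref="${2:-6.44}" 'BEGIN {
    gbit = b * 8 / 1e9
    printf "%.2f Gbit/s = %.1f%% of %.2f Gbit/s\n", gbit, 100 * gbit / ref, ref
  }'
}

pct_of_link 909920936.74   # large sequential read:  7.28 Gbit/s = 113.0% of 6.44 Gbit/s
pct_of_link 455115433.42   # large sequential write: 3.64 Gbit/s = 56.5% of 6.44 Gbit/s
```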

4K small-file write performance (random 4 KiB I/Os, 512 threads)

```
root@shidc-1001:~# rbd bench image01 --pool=rbdbench --io-type write --io-size 4096 --io-threads 512 --io-total 1G --io-pattern rand
bench type write io_size 4096 io_threads 512 bytes 1073741824 pattern random
SEC OPS OPS/SEC BYTES/SEC
1 8192 7798.99 31944659.49
2 11776 5896.13 24150552.29
3 14336 4783.32 19592498.81
4 16896 4343.15 17789541.35
5 19968 4082.78 16723070.27
.........
87 251392 2570.19 10527489.75
88 254464 2626.58 10758479.84
89 257024 2814.23 11527087.28
90 260096 2888.61 11831752.10
elapsed: 93 ops: 262144 ops/sec: 2802.14 bytes/sec: 11477553.81
```
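`rbd bench` does not print latency. With 512 I/O threads in flight, Little's law (average latency ≈ outstanding I/Os / IOPS) still gives a rough estimate. This assumes all 512 threads stay busy for the whole run. Under that assumption, at 2802 IOPS each 4 KiB random write took about 183 ms on average. The helper name is hypothetical.

```shell
# Little's law: average latency ~= outstanding I/Os / completed I/Os per second.
littles_latency_ms() {
  awk -v q="$1" -v iops="$2" 'BEGIN { printf "%.1f ms\n", q / iops * 1000 }'
}

littles_latency_ms 512 2802.14   # random 4K write, 512 threads -> 182.7 ms
```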

4K small-file read performance (random 4 KiB I/Os, 512 threads)

```
root@shidc-1001:~# rbd bench image01 --pool=rbdbench --io-type read --io-size 4096 --io-threads 512 --io-total 1G --io-pattern rand
bench type read io_size 4096 io_threads 512 bytes 1073741824 pattern random
SEC OPS OPS/SEC BYTES/SEC
1 8192 8242.20 33760061.34
2 12800 6642.54 27207826.90
3 18432 6314.50 25864178.94
4 22016 5543.16 22704775.17
5 24576 4938.45 20227890.67
.........
45 194048 7517.10 30790028.60
46 202752 7042.87 28847615.32
47 219648 8827.35 36156831.44
48 236032 10411.21 42644301.49
49 252928 14022.10 57434518.28
elapsed: 49 ops: 262144 ops/sec: 5265.06 bytes/sec: 21565700.96
```
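Spreading the result over the 43 OSDs gives a per-disk view. This assumes the load is spread evenly and that reads are served by each placement group's primary OSD only, as in a default replicated pool. Under those assumptions, 5265 random-read IOPS is roughly 122 IOPS per disk.

Writes land on every replica. With an assumed replica count of 3 (not stated in the post), the 2802 random-write IOPS of the previous test correspond to about 195 disk writes per OSD per second. Numbers of this size are in the range usually quoted for a single mechanical disk doing random I/O. That would be consistent with the spindles being the limit for small random I/O. The helper name is hypothetical.

```shell
# Average random IOPS per OSD, assuming load is spread evenly across OSDs.
# Third argument: how many OSDs each client I/O touches
# (1 for reads, the pool's replica count for writes).
per_osd_iops() {
  awk -v iops="$1" -v osds="$2" -v r="${3:-1}" 'BEGIN { printf "%.0f\n", iops * r / osds }'
}

per_osd_iops 5265.06 43      # random 4K read -> 122
per_osd_iops 2802.14 43 3    # random 4K write, 3 replicas (assumed) -> 195
```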

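Write performance with fio (random 4 KiB writes, iodepth 32)

Create a job file:

```
root@shidc-1001:~# nano rbd.fio
```

with the following content:

```ini
[global]
ioengine=rbd
clientname=admin
pool=rbdbench
rbdname=image01
rw=randwrite
bs=4k

[rbd_iodepth32]
iodepth=32
```

Results: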

```
root@shidc-1001:~# fio rbd.fio
rbd_iodepth32: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=rbd, iodepth=32
fio-3.12
Starting 1 process
Jobs: 1 (f=0): [f(1)][100.0%][w=644KiB/s][w=161 IOPS][eta 00m:00s]
rbd_iodepth32: (groupid=0, jobs=1): err= 0: pid=1790285: Sun Dec 13 22:10:34 2020
  write: IOPS=328, BW=1313KiB/s (1344kB/s)(1024MiB/798896msec); 0 zone resets
    slat (nsec): min=1963, max=1638.0k, avg=26001.73, stdev=15423.64
    clat (msec): min=3, max=1924, avg=97.49, stdev=123.43
     lat (msec): min=3, max=1924, avg=97.51, stdev=123.43
    clat percentiles (msec):
     | 1.00th=[ 7], 5.00th=[ 13], 10.00th=[ 25], 20.00th=[ 48],
     | 30.00th=[ 59], 40.00th=[ 68], 50.00th=[ 77], 60.00th=[ 84],
     | 70.00th=[ 92], 80.00th=[ 102], 90.00th=[ 120], 95.00th=[ 249],
     | 99.00th=[ 802], 99.50th=[ 919], 99.90th=[ 1011], 99.95th=[ 1036],
     | 99.99th=[ 1435]
   bw ( KiB/s): min= 104, max= 1976, per=100.00%, avg=1312.44, stdev=374.61, samples=1597
   iops       : min= 26, max= 494, avg=328.09, stdev=93.67, samples=1597
  lat (msec)  : 4=0.02%, 10=2.62%, 20=6.25%, 50=13.24%, 100=56.92%
  lat (msec)  : 250=15.97%, 500=2.38%, 750=1.41%, 1000=1.03%
  cpu         : usr=1.49%, sys=0.88%, ctx=194637, majf=0, minf=15383
  IO depths   : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=100.0%, >=64=0.0%
     submit   : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=0,262144,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency  : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):
  WRITE: bw=1313KiB/s (1344kB/s), 1313KiB/s-1313KiB/s (1344kB/s-1344kB/s), io=1024MiB (1074MB), run=798896-798896msec

Disk stats (read/write):
  dm-1: ios=8/35149, merge=0/0, ticks=4/16504, in_queue=16508, util=1.86%, aggrios=654/29239, aggrmerge=0/16137, aggrticks=551/17939, aggrin_queue=3804, aggrutil=2.30%
  sda: ios=654/29239, merge=0/16137, ticks=551/17939, in_queue=3804, util=2.30%
```
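fio's summary can be cross-checked from the totals it prints. 262144 writes (1024 MiB in 4 KiB blocks) over 798896 ms works out to about 328 IOPS and 1313 KiB/s. With 32 I/Os in flight, Little's law (average latency ≈ outstanding I/Os / IOPS) predicts an average latency of about 97.5 ms, in line with the reported `lat avg=97.51` msec.

The sketch below does that arithmetic. The function name is hypothetical, and it assumes the queue stays full at `iodepth` for the whole run.

```shell
# Recompute IOPS, bandwidth and expected average latency from fio's totals.
# Arguments: total I/Os, runtime in ms, block size in bytes, iodepth.
fio_crosscheck() {
  awk -v n="$1" -v ms="$2" -v bs="$3" -v q="$4" 'BEGIN {
    iops = n / (ms / 1000)
    printf "IOPS=%.1f BW=%.0f KiB/s avg_lat~%.1f ms\n", iops, iops * bs / 1024, q / iops * 1000
  }'
}

fio_crosscheck 262144 798896 4096 32   # IOPS=328.1 BW=1313 KiB/s avg_lat~97.5 ms
```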